Robot trained to read braille at twice the speed of humans

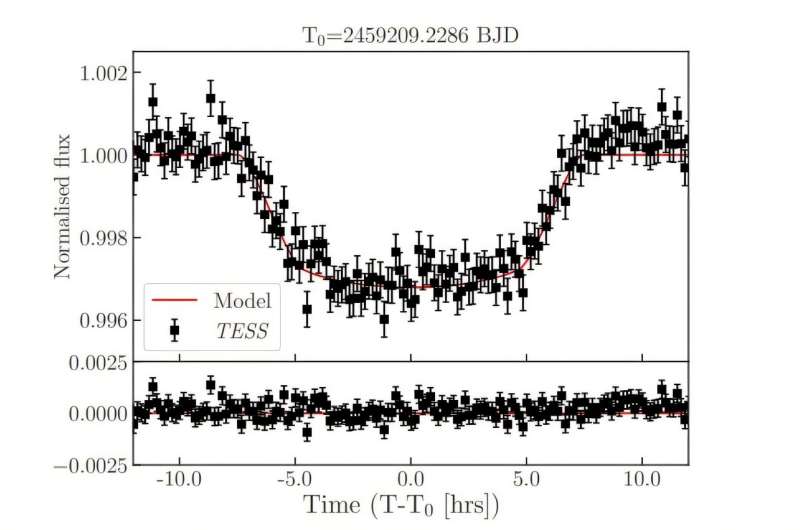

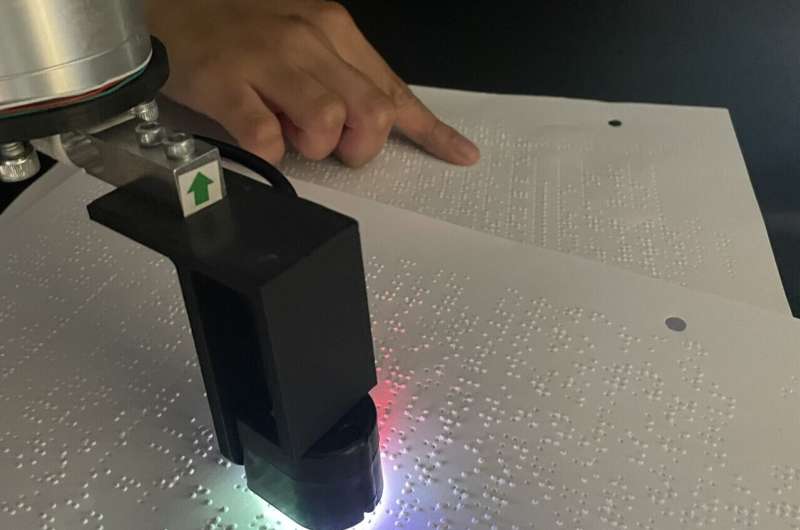

The research team, from the University of Cambridge, used machine learning algorithms to teach a robotic sensor to quickly slide over lines of braille text. The robot was able to read the braille at 315 words per minute with close to 90% accuracy.

Although the robot braille reader was not developed as an assistive technology, the researchers say the high sensitivity required to read braille makes it an ideal test in the development of robot hands or prosthetics with comparable sensitivity to human fingertips. The results are reported in the journal IEEE Robotics and Automation Letters.

Human fingertips are remarkably sensitive and help us gather information about the world around us. Our fingertips can detect tiny changes in the texture of a material or help us know how much force to use when grasping an object: for example, picking up an egg without breaking it or a bowling ball without dropping it.

Reproducing that level of sensitivity in a robotic hand, in an energy-efficient way, is a big engineering challenge. In Professor Fumiya Iida’s lab in Cambridge’s Department of Engineering, researchers are working on this and other skills that humans find easy but robots find difficult.

https://www.youtube.com/embed/pXz2e5HBnXo?color=white

Researchers have developed a robotic sensor that incorporates artificial intelligence techniques to read braille at speeds roughly double that of most human readers. (Note: this video is at 2x speed.) Credit: University of Cambridge

“The softness of human fingertips is one of the reasons we’re able to grip things with the right amount of pressure,” said Parth Potdar from Cambridge’s Department of Engineering and an undergraduate at Pembroke College, the paper’s first author. “For robotics, softness is a useful characteristic, but you also need lots of sensor information, and it’s tricky to have both at once, especially when dealing with flexible or deformable surfaces.”

Braille is an ideal test for a robot ‘fingertip’ as reading it requires high sensitivity, since the dots in each representative letter pattern are so close together. The researchers used an off-the-shelf sensor to develop a robotic braille reader that more accurately replicates human reading behavior.

“There are existing robotic braille readers, but they only read one letter at a time, which is not how humans read,” said co-author David Hardman, also from the Department of Engineering. “Existing robotic braille readers work in a static way: they touch one letter pattern, read it, pull up from the surface, move over, lower onto the next letter pattern, and so on. We want something that’s more realistic and far more efficient.”

The robotic sensor the researchers used has a camera in its ‘fingertip,’ and reads by using a combination of the information from the camera and the sensors. “This is a hard problem for roboticists as there’s a lot of image processing that needs to be done to remove motion blur, which is time- and energy-consuming,” said Potdar.

The team developed machine learning algorithms so the robotic reader would be able to ‘deblur’ the images before the sensor attempted to recognize the letters. They trained the algorithm on a set of sharp images of braille with fake blur applied. After the algorithm had learned to deblur the letters, they used a computer vision model to detect and classify each character.
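The idea of training on sharp images with artificial blur can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the article does not describe the blur model or the network the team used, so the sketch simply smears each image row with a box filter to imitate sliding motion, and the function names `horizontal_motion_blur` and `make_training_pair` are hypothetical.

```python
import numpy as np


def horizontal_motion_blur(image: np.ndarray, length: int) -> np.ndarray:
    """Blur each row with a box kernel `length` pixels wide.

    Assumption: sliding motion is approximated by a uniform horizontal smear.
    """
    if not 1 <= length <= image.shape[1]:
        raise ValueError("length must be between 1 and the image width")
    kernel = np.ones(length) / length
    # Convolve row by row; mode="same" keeps the image width unchanged.
    return np.stack([np.convolve(row, kernel, mode="same") for row in image])


def make_training_pair(sharp: np.ndarray, length: int):
    """Return (blurred_input, sharp_target) for supervised deblurring."""
    return horizontal_motion_blur(sharp, length), sharp


# Hypothetical 5x9 frame with one raised braille dot shown as a bright pixel.
sharp = np.zeros((5, 9))
sharp[2, 4] = 1.0

blurred, target = make_training_pair(sharp, length=3)
print(blurred[2])  # the dot's intensity is spread over three neighbouring pixels
```

A deblurring model would then be trained to map `blurred` back to `target`; varying `length` across examples would imitate different sliding speeds.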

Once the algorithms were incorporated, the researchers tested their reader by sliding it quickly along rows of braille characters. The robotic braille reader could read at 315 words per minute at 87% accuracy, which is twice as fast as, and about as accurate as, a human braille reader.

“Considering that we used fake blur to train the algorithm, it was surprising how accurate it was at reading braille,” said Hardman. “We found a nice trade-off between speed and accuracy, which is also the case with human readers.”

“Braille reading speed is a great way to measure the dynamic performance of tactile sensing systems, so our findings could be applicable beyond braille, for applications like detecting surface textures or slippage in robotic manipulation,” said Potdar.

In future, the researchers are hoping to scale the technology to the size of a humanoid hand or skin.

More information: Parth Potdar et al, High-Speed Tactile Braille Reading via Biomimetic Sliding Interactions, IEEE Robotics and Automation Letters (2024). DOI: 10.1109/LRA.2024.3356978

Journal information: IEEE Robotics and Automation Letters

Provided by University of Cambridge